Some Cisco Nexus Design Considerations

By stretch | Monday, May 7, 2012 at 2:45 a.m. UTC

I've been involved with a moderate datacenter deployment of Cisco Nexus switches over the past couple months, and I have learned a good deal about the architecture along the way. Here are some of the design considerations I've encountered, and my preferred solution to each.

Drawing the Layer Three Boundary

I work for a managed services provider which offers datacenter colocation, including private line and VPN connectivity to customer sites. One of the primary requirements for network design in this field is to maintain strict customer delineation across all segments of the network. The typical go-to tools for traffic isolation are IEEE 802.1Q VLANs at layer two and MPLS/VRFs at layer three. It is preferable to extend layer three (MPLS) forwarding as close to the datacenter edge as possible, to take advantage of the flexibility and inexpensive redundancy offered by equal-cost multipath routing.
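Equal-cost multipath is what makes layer three redundancy cheap. A router hashes each flow onto one of several equal-cost next hops, so every link carries traffic, and a failed link simply drops out of the set. The Python below is a minimal sketch of per-flow hashing under stated assumptions: the 5-tuple layout, the next-hop names, and the use of CRC32 as the hash are illustrative choices, not how any particular Nexus or MPLS platform computes its hash.

```python
import zlib

# A flow is identified by its 5-tuple: (src IP, dst IP, src port, dst port, protocol).
Flow = tuple[str, str, int, int, str]


def select_next_hop(flow: Flow, next_hops: list[str]) -> str:
    """Pick one equal-cost next hop for a flow by hashing its 5-tuple.

    Hashing keeps every packet of a flow on the same path (no reordering)
    while spreading different flows across all available paths.
    CRC32 is an arbitrary, deterministic stand-in for a hardware hash.
    """
    if not next_hops:
        raise ValueError("no equal-cost next hops available")
    key = "|".join(str(part) for part in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    paths = ["core-1", "core-2"]  # hypothetical upstream routers
    flow = ("10.1.1.10", "192.0.2.50", 49152, 443, "tcp")
    print("Both paths up:", select_next_hop(flow, paths))
    # If core-1 fails, it is withdrawn from the set and its flows rehash onto the survivor.
    print("core-1 down:  ", select_next_hop(flow, ["core-2"]))
```

The redundancy is "inexpensive" in the sense that no link sits idle waiting for a failure, unlike a spanning tree-blocked layer two link.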

Unfortunately, the only model in the Nexus switch series which supports MPLS is the 7000 (and that wasn't until NX-OS 5.2 was released just last year). (The Nexus 5500 series offers IP-only layer three functionality with an upgraded daughter card.) This is disappointing, because it necessitates deploying expensive Nexus 7000 chassis as access switches if you need to have MPLS at the edge. Otherwise, you're stuck with an extended layer two topology by way of Nexus 5000s.

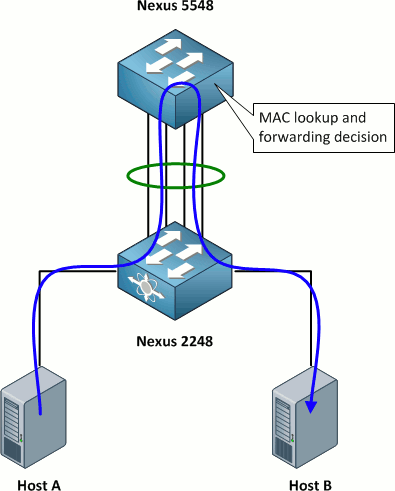

Nexus 2000s Don't do Local Forwarding

A Nexus 2000 series switch, often referred to as a fabric extender or FEX, relies entirely upon its parent Nexus 5000 or 7000 switch for all forwarding. Using the topology below as a reference, for host A to send a packet to host B, that packet must be sent up to the parent Nexus 5000 for a forwarding decision to be made and then back down to the FEX.

For this reason, it is critical to provide plenty of throughput to downstream FEXes; 20 Gbps for 24 GE interfaces and 40 Gbps for 48 GE interfaces are the recommended budgets. This effects a slight oversubscription ratio of 6:5, or 1.2:1. Provided that each FEX is physically located within a few racks of its parent switch, 40 Gbps can be delivered relatively cheaply using Cisco-proprietary fabric extender transceivers.
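Both recommended budgets work out to the same ratio. A quick Python sketch of the arithmetic, assuming every host port can run at its full 1 Gbps line rate at once (the worst case); the function name is ours:

```python
from fractions import Fraction


def oversubscription(host_ports: int, host_gbps: int, uplink_gbps: int) -> Fraction:
    """Worst-case host-facing bandwidth divided by fabric uplink bandwidth."""
    return Fraction(host_ports * host_gbps, uplink_gbps)


# Recommended budgets: 20 Gbps for a 24-port GE FEX, 40 Gbps for a 48-port GE FEX.
for ports, uplink in [(24, 20), (48, 40)]:
    ratio = oversubscription(ports, 1, uplink)
    print(f"{ports} x 1 GE over {uplink} Gbps: "
          f"{ratio.numerator}:{ratio.denominator} ({float(ratio)}:1)")
```

Both cases print 6:5 (1.2:1). Using `Fraction` keeps the ratio exact, so it reduces to 6:5 rather than a rounded decimal.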

Static FEX Interface Pinning

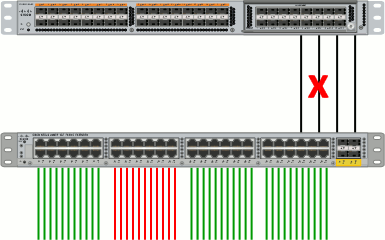

Speaking of FEX interface oversubscription, NX-OS provides a mechanism called static pinning to maintain deterministic oversubscription ratios in the event of a fabric uplink outage. Assume we connect a Nexus 2248 to its parent 5500 using four 10 Gbps Ethernet uplinks bonded into a single 40 Gbps port-channel. The default behavior of the FEX is to make available the entire 40 Gbps to all ports at any time, resulting in the aforementioned 1.2:1 oversubscription ratio on a 48-port FEX.

If one of the links in the port-channel fails, the aggregate uplink bandwidth is reduced to 30 Gbps (ignoring port-channel load-balancing concerns) and the oversubscription ratio increases to 1.6:1. Should two uplinks fail, the oversubscription ratio jumps to 2.4:1. This is normal behavior for a typical Ethernet switch.
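The same arithmetic, extended to port-channel member failures, reproduces those figures. This is a sketch under the same simplification as the text: it assumes traffic spreads perfectly across the surviving members, ignoring how the port-channel hash actually distributes flows. The constant and function names are illustrative.

```python
from fractions import Fraction

HOST_GBPS = 48 * 1  # Nexus 2248: 48 host ports at 1 Gbps
LINK_GBPS = 10      # each fabric uplink
LINKS = 4           # uplinks bundled into one 40 Gbps port-channel


def ratio_after_failures(failed: int) -> Fraction:
    """Oversubscription once `failed` port-channel members are down.

    Assumes ideal load balancing across the surviving members.
    """
    surviving = LINKS - failed
    if surviving <= 0:
        raise ValueError("all fabric uplinks are down; the FEX is isolated")
    return Fraction(HOST_GBPS, surviving * LINK_GBPS)


for failed in range(LINKS):
    r = ratio_after_failures(failed)
    print(f"{failed} failed, {(LINKS - failed) * LINK_GBPS} Gbps left: {float(r):.1f}:1")
```

Every host port shares in the degradation: each failure raises the ratio for all 48 ports at once.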

The static pinning feature allows groups of access interfaces to be bound to particular fabric (uplink) interfaces with the command pinning max-links. Static pinning applies when the fabric interfaces are configured as individual links rather than bundled into a port-channel. If a fabric interface fails, its pinned access interfaces are automatically shut down. This of course disconnects whatever devices are attached to those interfaces, but it preserves the oversubscription ratio for the remaining interfaces.

Shutting down a group of interfaces on the switch assumes that the connected devices are also dual-homed to another switch, in the B fabric, and will begin using their secondary uplinks.
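To contrast static pinning with the port-channel case, here is a minimal Python model of the behavior described above. It assumes host ports are divided evenly and contiguously across the fabric links (a simplification for illustration); `pin_ports` and `fail_fabric_link` are hypothetical names that model the outcome, not NX-OS internals.

```python
from fractions import Fraction

HOST_PORTS = 48  # Nexus 2248: 48 x 1 GE host ports
LINK_GBPS = 10   # each individual (non-bundled) fabric interface


def pin_ports(host_ports: int, max_links: int) -> dict[int, list[int]]:
    """Split host ports into equal, contiguous groups, one group per fabric link.

    The even, contiguous split is a simplifying assumption.
    """
    if host_ports % max_links:
        raise ValueError("host ports do not divide evenly across fabric links")
    per_link = host_ports // max_links
    return {
        link: list(range(link * per_link + 1, (link + 1) * per_link + 1))
        for link in range(max_links)
    }


def fail_fabric_link(pinning: dict[int, list[int]],
                     link: int) -> tuple[list[int], dict[int, Fraction]]:
    """Return the host ports shut down when `link` fails, plus the
    oversubscription ratio of each surviving group (1 Gbps per host port)."""
    down = pinning[link]
    surviving = {
        other: Fraction(len(ports) * 1, LINK_GBPS)
        for other, ports in pinning.items()
        if other != link
    }
    return down, surviving


pinning = pin_ports(HOST_PORTS, 4)  # analogous to pinning max-links 4
down, ratios = fail_fabric_link(pinning, 1)
print(f"Ports shut down: {down[0]}-{down[-1]}")
print("Surviving groups:", {link: f"{float(r)}:1" for link, r in ratios.items()})
```

One fabric failure takes 12 host ports offline, but the three surviving groups of 12 ports each stay at 12:10, the same 1.2:1 ratio as before the failure.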

Fabric Extenders: Single- or Dual-Home?

A Nexus 2200 can have all its uplinks to a single Nexus 5500, or its uplinks can be split between two upstream 5500s. This second option might seem appealing on the surface, but it presents a more complex design and imposes several critical limitations. First, dual-homed FEXes do not support dual-homed hosts in active/active operation, e.g. a LACP port-channel (active/standby is fine, though). Second, both upstream 5500s must be configured identically to share the downstream dual-homed FEXes.

Personally, I don't see any practical advantages to dual-homing a FEX. Given that a FEX is, essentially, a remote line card of an upstream switch, and we (should) already have redundant upstream switches, there is no need for FEX uplink redundancy to multiple parent switches.

Building Virtual Port-Channels (VPCs)

Virtual port-channels, also referred to as multichassis EtherChannel (MEC), allow a single downstream switch to form a port-channel to two upstream switches. This is similar to a pair of Catalyst 6500s joined as a Virtual Switching System (VSS) pair, except that both upstream Nexuses function and are managed as independent devices. A VPC can be formed not only in the upstream direction but downstream as well, resulting in one massive port-channel among two aggregation switches and two access switches in a grotesque orgy of redundant layer two connectivity.

The jury is still out on this one, as I haven't yet had any experience configuring a VPC in a production environment. While eliminating spanning tree-blocked links in the datacenter is very appealing, careful attention to the configuration of the upstream switches is necessary to keep everything running smoothly.

Allocating Virtual Device Contexts (VDCs)

Virtual device contexts are to the Nexus 7000 what virtual machines are to VMware ESXi: discrete, autonomous instances which are independent from one another but which share common physical hardware. The Nexus 7000 supports up to four VDCs, one of which is the default VDC. Physical interfaces are assigned among the different VDCs, which are administered independently. One VDC can be rebooted without affecting the operation of the others.

VDCs are an intriguing feature, though the limit of four per chassis constrains their potential. They are probably best suited to serve different operational domains; for example, one non-default VDC each for development, production, and operational networks.
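The interface-ownership rules above can be captured in a small toy model. This Python sketch is not an NX-OS API; the `Chassis` class and its methods are hypothetical. It encodes only what the text states: up to four VDCs including the default, and each physical interface belonging to exactly one VDC. It ignores hardware constraints such as modules that require interfaces to be allocated in port groups.

```python
from dataclasses import dataclass, field

MAX_VDCS = 4  # per the text: up to four VDCs per Nexus 7000, including the default VDC


@dataclass
class Chassis:
    """Toy model of VDC interface ownership (hypothetical, not an NX-OS API)."""
    interfaces: list[str]
    vdcs: dict[str, set[str]] = field(init=False)

    def __post_init__(self) -> None:
        # Every physical interface starts out in the default VDC.
        self.vdcs = {"default": set(self.interfaces)}

    def create_vdc(self, name: str) -> None:
        if name in self.vdcs:
            raise ValueError(f"VDC {name!r} already exists")
        if len(self.vdcs) >= MAX_VDCS:
            raise RuntimeError("chassis already has the maximum number of VDCs")
        self.vdcs[name] = set()

    def allocate(self, interface: str, vdc: str) -> None:
        """Move an interface into a VDC; it belongs to exactly one VDC at a time."""
        if interface not in self.interfaces:
            raise KeyError(interface)
        if vdc not in self.vdcs:
            raise KeyError(vdc)
        for members in self.vdcs.values():
            members.discard(interface)
        self.vdcs[vdc].add(interface)

    def owner(self, interface: str) -> str:
        return next(name for name, members in self.vdcs.items() if interface in members)


if __name__ == "__main__":
    n7k = Chassis([f"Ethernet1/{port}" for port in range(1, 9)])
    for name in ("dev", "prod", "ops"):  # the three non-default VDCs suggested above
        n7k.create_vdc(name)
    n7k.allocate("Ethernet1/1", "prod")
    print(n7k.owner("Ethernet1/1"), n7k.owner("Ethernet1/2"))
```

With the development, production, and operational VDCs in place, the chassis is already at its limit, and any attempt to create a fifth VDC raises an error.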

Posted in Data Center

Comments

May 7, 2012 at 2:51 a.m. UTC

It's now possible to vPC to the host with dual-homed FEXes. EvPC fixed that limitation (and added FCoE to dual-homed FEXes as well).

IMO, there is a great need to dual-home FEXes. Without it, you MUST vPC down to the host or do some dirty stuff if you're running active/passive on the host. One downside worth noting is that you are limited to only 12 FEXes per 5K pair when dual-homing. This is a significant decrease. We were able to go above this in the lab, but it's not supported according to the docs.

May 7, 2012 at 6:26 a.m. UTC

vPC to the end device works from my experience, as does vPC in general. But similar to VSS, it's recommended to implement out-of-band management for Nexus switches; otherwise one could become completely unreachable in case of vPC inconsistencies.

May 7, 2012 at 11:37 a.m. UTC

Thanks for taking the time to post stuff like this. As someone who recently completed the CCNA and is now working on the CCDA, reading stuff like this allows me to have a peek into bigger and better things to see what's going on :-)

May 7, 2012 at 3:41 p.m. UTC

Colby, note that the 12 FEXes limit is only for the first generation N5ks (5010, 5020). For 5548 and 5596 the limit is 24 as said in the doc you linked.

May 7, 2012 at 5:48 p.m. UTC

Markku,

Where exactly are you seeing that in the doc? This is great news, but I can't seem to find it. Is it just because it says "5000 Platform" instead of "5500" on that table? You think that's definitive?

Thanks.

Edit: Never mind. I see it now. This is great to know.

May 7, 2012 at 7:13 p.m. UTC

We have gotten around the static pinning restriction by using a port-channel as the uplink to the 5K. This allows the links to dynamically adjust to the new oversubscription ratio rather than putting 12 customer links in the dark in the case of a link failure.

This is obviously only an issue if you have single-homed connections. If you have dual-homed connections, it's not so much an issue, as you have stated, since the host can use its other uplink to the other FEX.

May 8, 2012 at 12:51 a.m. UTC

Great article. I very much agree with your sentiment in re not dual-homing FEXs. Countering Colby's (commenter) argument, I'd say that vPC or some other host-based link redundancy (into disparate switches) is preferred. Dual-homing FEXs leaves a single point of failure. Ex. Lose the FEX, you lose your server.

May 8, 2012 at 1:47 a.m. UTC

C. Hayre, you appear to have misunderstood my argument. I'm surely not advocating single-connected servers. That will ALWAYS be a single point of failure.

My point is that you are limited in how you can connect hosts when single-homing FEXes. You should not run active/passive to single-homed FEXes. Again, this is an orphan-port scenario, yadda yadda. If you don't understand why that is bad, read up on vPC some more.

Dual-homing FEXes gives you flexibility at the host level. You can vPC or run active/passive without worrying about orphan-ports. In the end, it's a better, more flexible design, and it's recommended unless ALL of your hosts are a) dual-homed (which we all agree they should be) and b) vPCed.

May 10, 2012 at 2:08 a.m. UTC

You also have to remember that when you single-home a FEX and run active/passive on your servers (connected via two different single-homed FEXes), your server will not fail over to the other FEX if the FEX somehow loses its uplink to the 5K but the server's own link stays up. If the FEX is dual-homed, this should not be a problem. My two cents.

May 10, 2012 at 2:31 p.m. UTC

FYI, there are new supervisor modules coming out this summer that will expand the 4 VDC limit to 6 VDCs, and another one coming out later this fall that adds 2 more for a total of 8 VDCs per 7K.

May 10, 2012 at 4:08 p.m. UTC

Just a little information on VDCs and FEXes: a FEX can belong to only one VDC. This is due to the uplink on the N7K module: N7K-M132XP-12L.

Aymeric

May 11, 2012 at 9:15 a.m. UTC

Hi guys,

Dual-homed FEXes have some limitations, as far as I know. Because the FEX is not a switch, with a dual-homed design you can't run a port-channel down to the host; you can only do active/passive teaming. If you can only have active/passive, why would you use a dual-homed FEX? If the host has only one link to the FEX and the FEX fails, the host is still down.

In a Nexus course I took about two weeks ago, the instructor said that in this dual-homed scenario a failure of an N5K can disrupt traffic for about 30-45 seconds. I plan to test this to see what happens.

of course every design is different - I believe that single home with port channel to host is the best scenario, but dual home may be used if you need more bandwidth between fex and 5ks and you are not concerned with a fex failure.

Also if you have some big vPC scenario - maybe FabricPath would be a better solution.

May 11, 2012 at 8:28 p.m. UTC

Bogo, you CAN do vPC to the host as of the Enhanced vPC update in a dual-homed FEX topology, as posted above. Also, in my testing, there has been no such disruption when failing between 5Ks. Lots of misinformation being posted.

Randy, yes, you're describing an orphan port scenario, which is a big reason to dual-home your FEXes. It gives you the flexibility to run active/passive OR vPC to your hosts. When you single-home your FEXes, you MUST run vPC to the hosts, or use 'orphan-port suspend', which can have some quirks of its own.

May 24, 2012 at 1:57 p.m. UTC

@Randy, any fex losing uplink, or any N5596 losing VPC (due to L1 inconsistency etc) would shutdown any relevant port, so even though the FEX is powered, your server will lose that connectivity.

I'd still go with active-active dual-homed FEX design, and dual-homed servers with E-VPC, but just wanted to clarify that...

Nice article overall...

May 27, 2012 at 10:04 a.m. UTC

Sorry if you think this is a stupid notion, but why do we need N2Ks at all when they depend entirely on the parent switch? It seems better to connect standard Cisco switches, available at a much lower price, and reap more benefits.

May 30, 2012 at 11:39 a.m. UTC

@CiscoFreak, when you connect standard switches, you'll have to deal with configurations manually and also Spanning-Tree would/might become a problem. And, when you think of a DataCenter and the possible number of "racks", the problem "might" get bigger.

There comes the advantage of a Fabric Extender, which typically is just a remote version of a line card (as in a modular switch)...

April 30, 2014 at 8:03 p.m. UTC

cool stuff

May 5, 2014 at 7:51 a.m. UTC

I have been reading into this as we have a client who we are deploying N5K and N2K.

I'm looking to have the following design:

2 N2K, one of which will be uplinked to each N5K with 4x 10Gb links in Port-Channel and the second one with same configuration.

The host will attach to each FEX and have a LACP active/active setup.

Is this something which is supported with EvPC, as there is some confusing information out there?

Thanks.