The Value of a Microsecond

By stretch | Wednesday, April 2, 2014 at 12:36 a.m. UTC

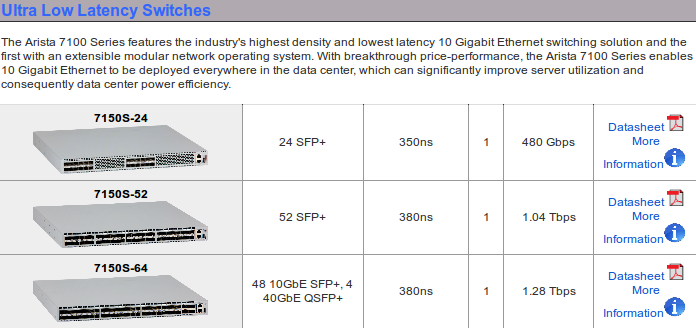

While perusing vendor datasheets, have you ever questioned the inclusion of seemingly insignificant latency specifications? Take a look at Arista's line-up, for instance. Their 7500 series chassis lists a port-to-port latency of up to 13 microseconds (that's thirteen thousandths of a millisecond) whereas their "ultra-low latency" 7150 series switches provide sub-microsecond latency.

But who cares? Both values can be roughly translated as "zero" for us wetware-powered humans. (For reference, 8,333 microseconds pass in the time it takes your shiny new 120 Hz HDTV to complete one screen refresh.) So, does anyone really care about such obscenely low latency?
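For scale, here is the same arithmetic in code: one refresh period at 120 Hz, and how many switch traversals at the two datasheet latencies would fit inside it. Rounding the 7150's "sub-microsecond" figure up to 1 µs is an assumption made for simplicity.

```python
# Back-of-the-envelope latency comparison using the figures quoted above.
REFRESH_HZ = 120             # example display refresh rate
CHASSIS_LATENCY_US = 13.0    # worst-case port-to-port latency, 7500 series (datasheet figure)
ULL_LATENCY_US = 1.0         # 7150 series "sub-microsecond", rounded up to 1 µs (assumption)

def refresh_period_us(hz: float) -> float:
    """Duration of one screen refresh, in microseconds."""
    return 1_000_000 / hz

frame_us = refresh_period_us(REFRESH_HZ)
print(f"One refresh at {REFRESH_HZ} Hz: {frame_us:,.0f} µs")
# How many switch traversals fit inside a single refresh at each latency.
print(f"7500 series traversals per refresh: {frame_us / CHASSIS_LATENCY_US:,.0f}")
print(f"7150 series traversals per refresh: {frame_us / ULL_LATENCY_US:,.0f}")
```

In other words, a frame of video lasts long enough for a packet to cross hundreds of chassis switches, or thousands of ultra-low-latency ones.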

For a certain few organizations involved in high-frequency stock trading, those shaved microseconds can add up to billions of dollars in profit. The New York Times recently published an article titled "The Wolf Hunters of Wall Street" by Michael Lewis, which reveals how banks have leveraged low network latency to manipulate stock prices in open markets. (Thanks to @priscillaoppy for the tip!)

The increments of time involved were absurdly small: In theory, the fastest travel time, from Katsuyama’s desk in Manhattan to the BATS exchange in Weehawken, N.J., was about two milliseconds, and the slowest, from Katsuyama’s desk to the Nasdaq exchange in Carteret, N.J., was around four milliseconds. In practice, the times could vary much more than that, depending on network traffic, static and glitches in the equipment between any two points. It takes 100 milliseconds to blink quickly — it was hard to believe that a fraction of a blink of an eye could have any real market consequences.

Essentially, if party A has lower latency to several exchanges than party B does, party A can take advantage of the lag between exchanges when party B places a large order: once B's order reaches the nearest exchange, A can buy up shares on the other exchanges before B's order arrives there, inflating the stock price slightly within milliseconds. In computer science, we know this as a race condition. Multiplied by volume and time, these individually negligible gains could generate billions in profit for high-frequency traders.
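The race can be sketched as a toy timing model. Everything here is a hypothetical simplification: B fires all legs of its order at once, A spots the first leg when it lands at the nearest exchange, takes `detect_us` to react, and then sends its own orders to the remaining exchanges. B's 2,000 µs and 4,000 µs figures echo the article's two- and four-millisecond estimates; the NYSE figure and all of A's latencies are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Exchange:
    name: str
    from_b_us: float  # one-way latency from party B to this exchange
    from_a_us: float  # one-way latency from party A's vantage point to this exchange

def front_run_targets(exchanges: list[Exchange], detect_us: float) -> list[str]:
    """Names of exchanges where A's order arrives before B's (toy model)."""
    # B sends every leg at t = 0; the first leg lands at the nearest exchange.
    first = min(exchanges, key=lambda ex: ex.from_b_us)
    # A sees that leg, reacts, then races B's slower legs to the other exchanges.
    a_sends_at = first.from_b_us + detect_us
    return [
        ex.name
        for ex in exchanges
        if ex is not first and a_sends_at + ex.from_a_us < ex.from_b_us
    ]

market = [
    Exchange("BATS", from_b_us=2_000, from_a_us=0),      # A co-located here (hypothetical)
    Exchange("NYSE", from_b_us=3_000, from_a_us=400),    # hypothetical
    Exchange("Nasdaq", from_b_us=4_000, from_a_us=300),  # hypothetical
]
print(front_run_targets(market, detect_us=10))  # ['NYSE', 'Nasdaq']
```

Increasing `detect_us` (or otherwise slowing A down) shrinks the list; once A's head start is gone, the race disappears, which is the lever the article turns to next.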

In order to create a new stock exchange that was immune to such race conditions, the subjects of the article figured out that they needed to ensure that none of its connected customers were "closer" (with regard to latency) than the furthest peer exchange:

The necessary delay turned out to be 320 microseconds; that was the time it took them, in the worst case, to send a signal to the exchange farthest from them, the New York Stock Exchange in Mahwah. Just to be sure, they rounded it up to 350 microseconds. To create the 350-microsecond delay, they needed to keep the new exchange roughly 38 miles from the place the brokers were allowed to connect to the exchange.
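The reasoning in that passage reduces to a small calculation: take the worst-case time to reach any peer exchange, then round up for margin. This sketch assumes a 50 µs rounding step (the article only says 320 µs was rounded up to 350 µs). Only the Mahwah figure comes from the article; the other peer latencies are hypothetical placeholders.

```python
import math

def required_delay_us(peer_latency_us: dict[str, float], granularity_us: int = 50) -> int:
    """Smallest delay, rounded up to granularity_us, that covers the slowest peer.

    No customer may be "closer" than the farthest peer exchange, so the
    artificial delay must be at least the worst-case time to reach it.
    """
    worst = max(peer_latency_us.values())
    return math.ceil(worst / granularity_us) * granularity_us

def customer_can_outrun(delay_us: float, peer_latency_us: dict[str, float]) -> bool:
    """True if some peer exchange is farther away than the delay imposed on customers."""
    return any(lat > delay_us for lat in peer_latency_us.values())

peers = {
    "NYSE (Mahwah)": 320.0,      # worst case quoted in the article
    "Nasdaq (Carteret)": 250.0,  # hypothetical
    "BATS (Weehawken)": 150.0,   # hypothetical
}
delay = required_delay_us(peers)
print(delay, customer_can_outrun(delay, peers))  # 350 False
```

A delay shorter than the worst-case peer latency (say, 300 µs) would leave a window in which a customer could still beat the exchange's view of its slowest peer.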

As moving their point of presence 38 miles outside the metro area wasn't an attractive prospect, their solution to the need for artificial latency was surprisingly low-tech:

A bright idea came from a new employee, James Cape, who had just joined them from a high-frequency-trading firm: Coil the fiber. Instead of running straight fiber between the two places, why not coil 38 miles of fiber and stick it in a compartment the size of a shoe box to simulate the effects of the distance. And that's what they did.

Yep, they connected peers through a 38-mile spool of fiber mounted in the rack. The photo from the article appears to be of a Corning Optical Network Simulation System (brilliant marketing for "a big ol' spool of fiber").
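As a sanity check on the spool, the propagation delay through fiber is simply its length divided by the speed of light in glass. This sketch assumes a group index of 1.468, a typical value for standard single-mode fiber; real spools vary, and the numbers ignore connectors, optics, and any switching in the path. Under that assumption, light covers about 204 km per millisecond in fiber, or roughly 4.9 µs per kilometer.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
GROUP_INDEX = 1.468                # assumed; typical for standard single-mode fiber
KM_PER_MILE = 1.609344

def fiber_delay_us(length_km: float, n: float = GROUP_INDEX) -> float:
    """One-way propagation delay through length_km of fiber, in microseconds."""
    return length_km / (SPEED_OF_LIGHT_KM_S / n) * 1_000_000

def fiber_length_km(delay_us: float, n: float = GROUP_INDEX) -> float:
    """Fiber length needed to produce a given one-way delay."""
    return delay_us / 1_000_000 * (SPEED_OF_LIGHT_KM_S / n)

spool_km = 38 * KM_PER_MILE
print(f"38 miles = {spool_km:.1f} km -> {fiber_delay_us(spool_km):.0f} µs one-way")
print(f"350 µs -> {fiber_length_km(350):.1f} km of fiber")
```

By this estimate, 38 miles of fiber contributes roughly 300 µs of one-way delay, the same ballpark as the figures quoted above. The article doesn't break down how the 350 µs budget maps onto the spool, so treat this as a plausibility check rather than a reconstruction of their build.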

So, as it turns out, microseconds can matter very much in some cases. It's a strange concept to wrap one's head around, but it really highlights just how much faster our networks operate than we do.

Posted in Hardware

Comments

April 2, 2014 at 4:17 a.m. UTC

Yep, the marketing name means they get to charge more (which they do) ;-)

April 2, 2014 at 12:19 p.m. UTC

How curious that technology is more important for people who understand it less! Great article!

April 3, 2014 at 6:53 p.m. UTC

I was just watching a clip from the NYSE and was shocked to see dozens of trading-floor PCs still running the default Windows XP desktop... Hard to believe these are the same people who actually created a need for such a device.

April 3, 2014 at 9:21 p.m. UTC

FYI, those people on the trading floors aren't the ones taking advantage of this service. We're talking about powerful computers with FPGA NICs here.

April 3, 2014 at 11:12 p.m. UTC

I worked as a network architect for a couple of years at the oldest stock exchange in the world, the NYSE Euronext exchange in Amsterdam. Just as you mentioned, all of the high-frequency arbitrage firms were in fact gearing up with Arista devices in an arms race to shave microseconds. As mentioned above, the terminals themselves aren't doing the heavy lifting; instead, they load predictive algorithms onto ultra-low-latency servers co-located at the various exchanges around Europe. The Arista switches simply facilitate the low-latency transactions from the firms' applications to the local exchange POP. The switches performed beautifully, and the level of support we received from Arista was good.

Word of warning: if you are keen to deploy this sort of low-latency switching, don't expect to find many of the other features found on your Cisco and Juniper devices. GRE? Nope. Static NAT? Nope. Do your research and understand your use case before investing in new kit.

Great article, Stretch. Keep up the good work.

April 4, 2014 at 2:47 p.m. UTC

You should check out Juniper's switches; they were chosen for the NYSE Euronext project because their multicast latency was below 10 microseconds and the complete switching operation, from ingress to egress, took less than 7 microseconds.

http://www.networkworld.com/reviews/2008/071408-test-juniper-switch.html

April 4, 2014 at 5:50 p.m. UTC

Disclaimer: I work for Cisco.

@bufo333 - 7 microseconds = 7,000 nanoseconds. That’s pretty slow compared to today’s crop of low-latency switches. In fact, switches in the Cisco Nexus 3500 Series are faster than both the Juniper switches you mentioned and the Arista 7150S Series switches that Jeremy mentioned in the article.

In normal mode the average L2/L3 latency through the Nexus 3548 is approximately 250ns. In Warp mode the average latency drops to approximately 190ns.

@Brian K - Some features are being added to the current generation of low latency switches. For example, the Nexus 3500 Series supports NAT, both static and dynamic.

April 8, 2014 at 3:18 p.m. UTC

You can also consider the effects on military networks. For a few milliseconds you can miss the target...

April 29, 2014 at 1:29 a.m. UTC

@Michael Bonnett, Jr - Is the Nexus 3K series using the Broadcom Trident II chipset that the QFX5100 uses? I'm guessing the warp mode the Nexus 3K uses to achieve 190ns latency is a cut-through mode.

October 13, 2014 at 5:38 a.m. UTC

190ns is currently the winner, via Cisco's custom ASIC. Services have been added slowly but surely, although manually adjusting TCAM allocation was needed to avoid crashing the switch. Many use cases beyond equities have presented themselves. FPGAs are better; however, programmers for them are hard to find and expensive.

Warp SPAN gets traffic in and out in 50ns (taking a price feed in and sending it out to 1-4 hosts). Other options (limited switching, layer 1 only) are Exablaze, Zeptonics, and Gnodal. That said, I'm not sure which company is suing which nowadays.